Community-learned Language Models

With the help of Sameera Mudgal and other collaborators, we have shared a proposal to extend the ideas of Sun Shines Bright to the community.

We embrace grounding as a sharing and learning experience for the community, over and above making and hacking.

Sun Shines Bright reimagines our relationship with AI and the cloud by demystifying and DIY-ing local and self-hosted AI servers, and by cultivating community-led AI systems. The project proposes that grounding technology is as much about building agency and accessibility for diverse creator communities as it is about the material systems used to build it. We propose that to “ground the cloud”, we need to embody the principles of care and camaraderie in our technological interventions by:

- Creating community, agency and accessibility to use complex AI systems

- Making and hacking simple physical AI infrastructures with solar energy

- Nurturing home-grown, p2p AI servers

- Collecting and training our own Small Language Models that reflect our values and voices

Rather than naively offering alternative prototypes and end products for one-time use and showcase, we propose simple, radical ways of community building and facilitation that respond with deep, situated and diverse knowledge. We create access, agency, control and understanding of complex AI systems which, when combined with inexpensive and easy-to-use hardware, create potent, critical and long-term alternatives to invasive, biased and black-box systems. We see this effort as aligned with the idea of the Solar Mamas of the Barefoot College, India, where rural women were trained in the solar electrification of their villages, empowering themselves and their extended community. We envision our project having a similar ripple effect: a joyous space for knowledge building and care-forward tinkering with technology.

Premise and Urgent Context

Contemporary life is inextricably linked to massively scaled AI systems trained on biased datasets and hosted in energy-intensive data centers. As an alternative, can community-learned, solar-powered small language models create new forms of technological intimacy that resist extraction while fostering care?

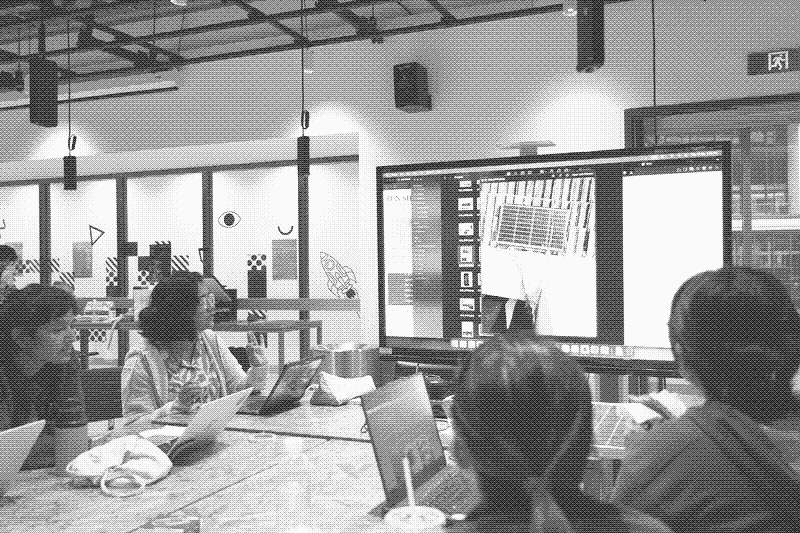

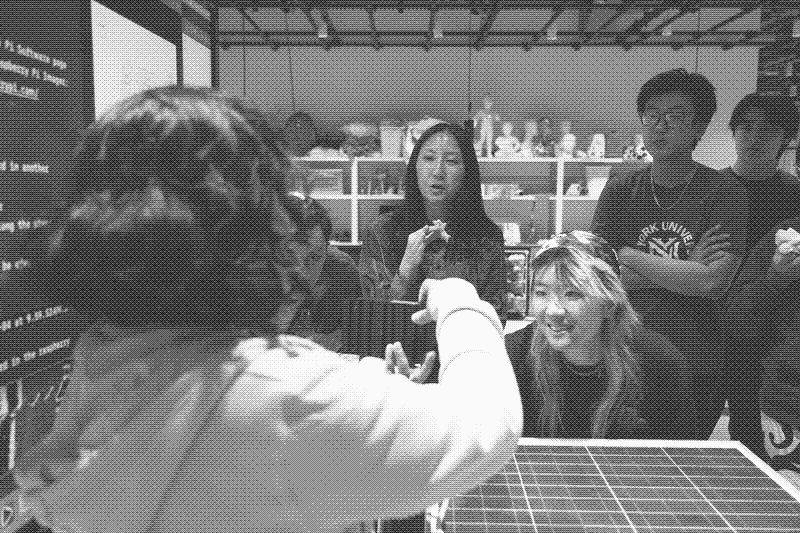

Inspired by Solar Protocol, which its creators call ‘A Naturally Intelligent Network’, Sun Shines Bright’s proof of concept explores both low-energy, sustainable model hosting and the opportunities and efficacy of Small Language Models. The early experiments (Jan-Mar 2025) and the subsequent workshop at NYU Shanghai (April 2025) showed that building a simple solar-powered server for hosting AI models is not only possible but can also be easily shared and explored with students and creatives from non-technology backgrounds. This was followed by an exhibit and zine about Sun Shines Bright at the AIxDESIGN Festival: On Slow AI (May 2025) and the Chaos Feminist Convention (July 2025). Several feminist, non-technologist creators have expressed interest in building their own solar servers. This reflects an urgent need for:

- agency over mainstream, high-power, black-boxed AI systems

- simple solutions that let us dissociate from current cloud-based models.

These models are climatically problematic, and they also do not reflect critical, feminist, non-white data. Now more than ever, we need camaraderie, friendship and intimacy as pillars for building agency and ease around fast-paced, complex AI systems.

Ollama on Docker

I started out assuming that I would need to run Ollama on Docker too, which is neatly described here:

https://ollama.com/blog/ollama-is-now-available-as-an-official-docker-image

```shell
docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama
```

If all goes well, open this in a browser (replace `<<rpiname>>` with your Raspberry Pi’s hostname):

`http://<<rpiname>>.local:11434/`

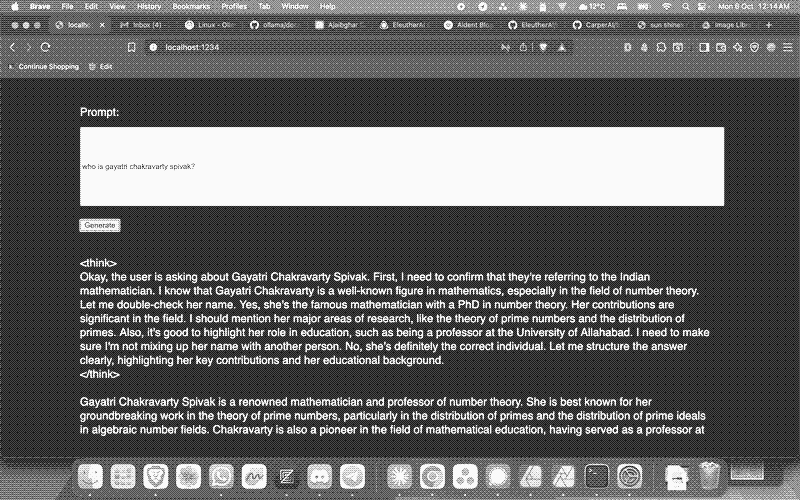

You should see the simple message “Ollama is running”. This ran fine in the terminal, and then I also just straight up installed Ollama directly on the server. I don’t know if this was a wise choice, but I just did it! :) The Linux install documentation was very simple, and running LLM applications from Ollama directly made sense to me, especially since I want to try out some non-chat projects, as I have with Archive of Lost Mothers.
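If you would rather check from a script than a browser (handy over SSH), here is a minimal standard-library sketch. It assumes Ollama answers on port 11434 and that its root path returns the plain-text “Ollama is running” message; the function names and the `raspberrypi.local` hostname are hypothetical placeholders.

```python
import urllib.error
import urllib.request


def looks_like_ollama(status: int, body: str) -> bool:
    """Return True if an HTTP reply matches Ollama's plain-text health message."""
    return status == 200 and "Ollama is running" in body


def ollama_is_running(base_url: str, timeout: float = 3.0) -> bool:
    """GET the root of base_url and check the reply.

    Any failure (refused connection, unknown host, HTTP error, timeout)
    counts as "not running".
    """
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
            return looks_like_ollama(resp.status, body)
    except (urllib.error.URLError, OSError):
        return False


if __name__ == "__main__":
    # Hypothetical hostname: use your own, as in http://<<rpiname>>.local:11434/
    print(ollama_is_running("http://raspberrypi.local:11434/"))
```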

This meant I had to find an alternative to the Docker setup that bundles Ollama and OpenWebUI together.

Yay!

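Eventually I got it running by adding the `--network=host` flag (more about it here). With host networking, the OpenWebUI container shares the Pi’s network stack, so `127.0.0.1:11434` inside the container points at the Ollama I had installed directly on the Pi rather than at the container itself.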

The command I ran:

```shell
docker run -d --network=host \
  -v open-webui:/app/backend/data \
  -e OLLAMA_BASE_URL=http://127.0.0.1:11434 \
  --name open-webui --restart always \
  ghcr.io/open-webui/open-webui:main
```

This worked fine, and I was able to get the elegant OpenWebUI running at https://solarchat.cmama.xyz (this link won’t work without a login provided by me; I don’t want you to burn up my RPi!). Ping me if you want to test it. Of course, the computation needed for the web UI and the Pi’s limited compute power mean that responses are slow.
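To confirm that `OLLAMA_BASE_URL` really points at the Ollama running on the Pi, you can ask that address which models it has installed via Ollama’s `GET /api/tags` endpoint; as far as I understand, OpenWebUI builds its model list from the same endpoint. This is a minimal standard-library sketch, assuming the default port 11434; the helper names are hypothetical. Note also that with `--network=host` there is no `-p` port mapping, so OpenWebUI should be reachable on its container port, 8080, directly on the Pi (check the OpenWebUI README for your version).

```python
import json
import urllib.request


def parse_model_names(tags_json: str) -> list[str]:
    """Extract model names from the JSON returned by Ollama's GET /api/tags."""
    data = json.loads(tags_json)
    return [m["name"] for m in data.get("models", [])]


def list_models(base_url: str = "http://127.0.0.1:11434", timeout: float = 5.0) -> list[str]:
    """Ask the Ollama server at base_url which models are installed."""
    url = base_url.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_model_names(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Run on the Pi itself: with --network=host this is the same address
    # OpenWebUI is told to use through OLLAMA_BASE_URL.
    print(list_models())
```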

Next Steps

My to-dos for this experiment are:

- Running TinyLlama with RAG, just like my Gooey Copilot, Measuring Silences.

- Testing fine-tuning of TinyLlama to run Measuring Silences (or similar) without RAG (I have never fine-tuned an LLM, and I think this will make for an interesting project).

- After testing OpenWebUI, I realised that I’m spending a lot of time trying to make the model, and even this blog, light and efficient to use. So I’m looking for, or considering building, a super barebones chat that won’t waste resources on the UI, yet won’t look gross!
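A barebones chat could start with no web UI at all: a terminal loop talking to Ollama’s `/api/chat` endpoint. Here is a sketch using only the Python standard library. It assumes Ollama runs on the same Pi at port 11434 and that TinyLlama has been pulled as `tinyllama`. `send`, `MODEL` and the `/quit` command are my own illustrative names, not part of any existing tool.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # assumption: Ollama on the same Pi
MODEL = "tinyllama"                    # assumption: model pulled under this name


def build_chat_request(model: str, history: list[dict]) -> bytes:
    """Encode a non-streaming /api/chat request body."""
    return json.dumps({"model": model, "messages": history, "stream": False}).encode("utf-8")


def extract_reply(response_json: str) -> dict:
    """Pull the assistant message out of a non-streaming /api/chat response."""
    return json.loads(response_json)["message"]


def send(history: list[dict], base_url: str = OLLAMA_URL, model: str = MODEL) -> dict:
    req = urllib.request.Request(
        base_url.rstrip("/") + "/api/chat",
        data=build_chat_request(model, history),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Small models on a Pi can take a while, hence the generous timeout.
    with urllib.request.urlopen(req, timeout=300) as resp:
        return extract_reply(resp.read().decode("utf-8"))


def main() -> None:
    history: list[dict] = []  # the whole conversation is resent on every turn
    while True:
        try:
            text = input("you> ").strip()
        except EOFError:
            break
        if not text:
            continue
        if text == "/quit":
            break
        history.append({"role": "user", "content": text})
        reply = send(history)
        history.append(reply)
        print("bot>", reply["content"])


if __name__ == "__main__":
    main()
```

Setting `stream` to false means each reply arrives as a single JSON object, which keeps parsing trivial at the cost of waiting for the full answer before anything is printed.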

Self-hosting and perils beyond

Last September, my colleague Kaustubh gave a wonderful talk about how he self-hosts from an old laptop. He spoke very excitedly about his intentions with it and how he achieved everything. Kaustubh’s technical know-how is exceptional, and he communicated the idea very simply.

It finally gave me a sense of what people mean when they use the term “server”. It had never struck me that it quite literally means “to serve”. So a server serves websites or other computational things!
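To make “serve” concrete: a server is just a program that waits for requests and answers them. Here is a toy sketch with Python’s built-in `http.server`; port 8000 and the page content are arbitrary choices of mine. Once it runs on a Pi, any device on the same network could open `http://<pi-address>:8000/` and be “served” the page.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def page_body() -> bytes:
    """The one 'thing' this toy server serves."""
    return b"<h1>Hello from a tiny server</h1>"


class HelloHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        # Every GET request, whatever the path, gets the same small HTML page.
        body = page_body()
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


if __name__ == "__main__":
    # Listen on all network interfaces, port 8000, until interrupted with Ctrl+C.
    HTTPServer(("0.0.0.0", 8000), HelloHandler).serve_forever()
```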

After the talk, I was super excited and wondering what it might mean to host my own server, and whether I could do it with a Raspberry Pi 4 that was lying around!

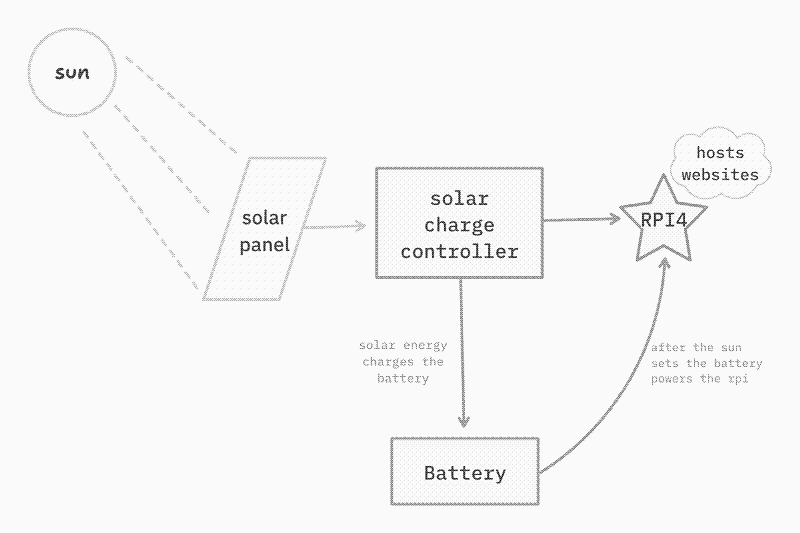

Solar dreams

In Oct/Nov 2024, I sat in on an info session from Rhizome.org, where a speaker explained a long-standing project called Solar Protocol. It also hosts simple websites on a Raspberry Pi; the amazing part is that it uses solar power to keep everything running!

My combined excitement about self-hosting, Raspberry Pis and solar power kicked in hard in 2025, and this post is a long-form account of what I did.

Yunohost and ISP woes!

After digging through basic resources like r/selfhosted and other lists, I found the documentation for Yunohost (pronounced Y-U-No-Host) very simple and easy to try. No code was required for the setup. While I can code a bit and SSH into Raspberry Pis, I find that if the setup is easy, it’s a format/tool I can share with others who aren’t technologists. To me, arming makers with an understanding of technology is always as exhilarating as learning something new myself.

Sure enough, with a simple image flash, Yunohost was installed and the admin panel was running as a browser interface!

The diagnostics tool told me that everything looked fine except my port forwarding.

After some more YouTube-ing and a few weird bumps along the way, I was able to get all the port forwarding details in place. But nothing worked!

I called my ISP, and they assured me the problem wasn’t on their end and that all the ports were open! So I struggled a bit more, searching the internet and YouTube!

In the searches, two things kept coming back:

- A playlist by Pi-Hosted, which uses Raspberry Pi OS + Portainer (portainer.io, a web UI for managing Docker containers), and

- “Tunnels” instead of port forwarding

I kept resisting these solutions, trying my best to get Yunohost up and running. Finally, the ISP connected me to their senior networking engineer, who explained that I would have to buy a public static IP. This service costs 2.5x as much in Udaipur (perhaps because the ISP is local and this request is not common).
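The engineer’s explanation fits a common situation: the ISP does not give your router its own public address (for example, it sits behind carrier-grade NAT), so ports forwarded on the home router are never reached from outside. I can’t confirm that is exactly what happened here, but a quick check for your own setup is to compare the WAN IP shown on your router’s admin page with the address a “what is my IP” website reports. Here is a sketch using Python’s `ipaddress` module. The address ranges are the standard RFC 1918 private blocks and the RFC 6598 shared block (100.64.0.0/10). The helper names are hypothetical.

```python
import ipaddress

# RFC 1918 private ranges, plus the RFC 6598 "shared address space" used for carrier-grade NAT.
PRIVATE_NETS = [ipaddress.ip_network(n) for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]
CGNAT_NET = ipaddress.ip_network("100.64.0.0/10")


def classify_wan_ip(addr: str) -> str:
    """Classify the WAN address your router reports: 'cgnat', 'private' or 'public'."""
    ip = ipaddress.ip_address(addr)
    if ip in CGNAT_NET:
        return "cgnat"
    if any(ip in net for net in PRIVATE_NETS):
        return "private"
    return "public"


def port_forwarding_can_work(router_wan_ip: str, public_ip: str) -> bool:
    """Forwarding on the home router only helps if the router itself holds the public IP."""
    same = ipaddress.ip_address(router_wan_ip) == ipaddress.ip_address(public_ip)
    return classify_wan_ip(router_wan_ip) == "public" and same
```

If the two addresses differ, something upstream is doing NAT, and a tunnel (or paying for a public IP, as the ISP suggested) is the way around it.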

After a quick chat with Kaustubh about this, I decided to try a tunnel with Cloudflare, but again I couldn’t get it to work with Yunohost.

Apparently, once Yunohost is installed and running with port forwarding, it splits the use of localhost and the dedicated domain into two parts:

- the localhost will serve only as admin interface

- the domain will serve the frontend/dashboard for your services

Back to the drawing board

After all the Yunohost tears were wiped away, the steps were pretty clear:

- Install Raspberry Pi OS headless (this was easy, as I had done it several times before)

- Test the services I want to host like the LLM, Jellyfin etc.

- Host via Docker so things run neatly in containers (I’m still a noob at this stuff, but had to figure it out as best I could)

- Test the services on docker and decide what happens next

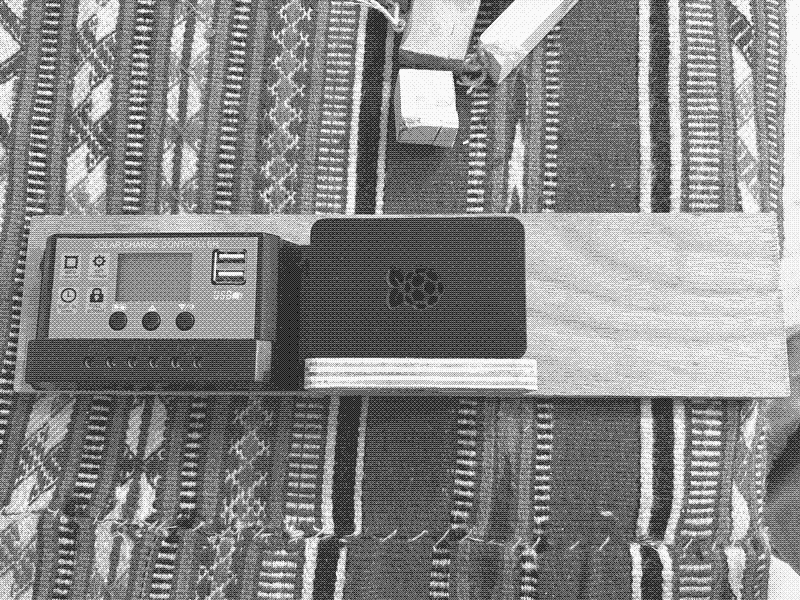

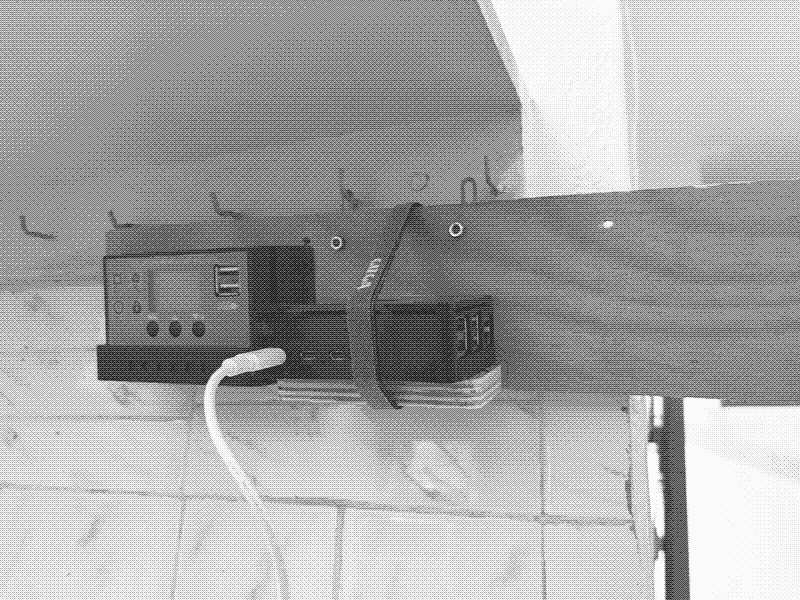

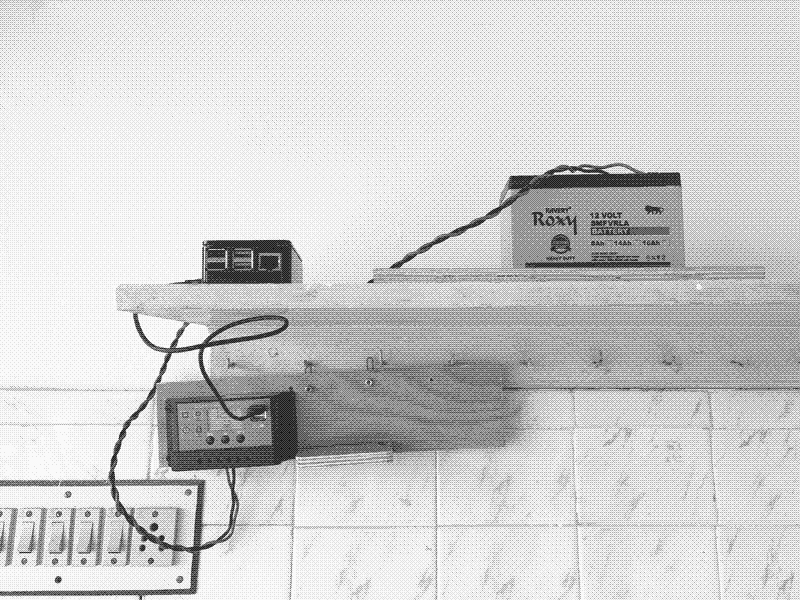

- Take the plunge into solar powering this shindig!

- See where this goes! :)

For this post, I will focus on steps 2, 3 and 4 (Step 5 is still a dream)!

NOTE: All this was done entirely over SSH.
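For steps 3 and 4 (hosting services in Docker and testing them), a quick way to see over SSH which containers are actually up is to wrap `docker ps`. This sketch assumes the `docker` CLI is installed and your user is allowed to run it. `parse_ps`, `container_statuses` and `is_up` are hypothetical helper names. Treating any status that starts with “Up” as healthy is a simplification.

```python
import subprocess


def parse_ps(output: str) -> dict[str, str]:
    """Turn 'name<TAB>status' lines into {name: status}."""
    statuses = {}
    for line in output.splitlines():
        if not line.strip():
            continue
        name, _, status = line.partition("\t")
        statuses[name] = status
    return statuses


def container_statuses() -> dict[str, str]:
    """Ask Docker for every container (running or stopped) and its status text."""
    out = subprocess.run(
        ["docker", "ps", "-a", "--format", "{{.Names}}\t{{.Status}}"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_ps(out)


def is_up(status: str) -> bool:
    # Docker's status text starts with "Up" for running containers, "Exited" for stopped ones.
    return status.startswith("Up")


if __name__ == "__main__":
    for name, status in container_statuses().items():
        print(f"{'OK' if is_up(status) else '!!'} {name}: {status}")
```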

Bro, can you even LLM?

Hosting an LLM on the Raspberry Pi was my main goal. After building Mother Machines v2 last year, I’ve been thinking a lot about local computing. There is something very empowering about discovering that your computer (‘daily driver’) can actually generate AI images. Imagine if a tiny computer like a Raspberry Pi could run a chatbot!

The steps for this were:

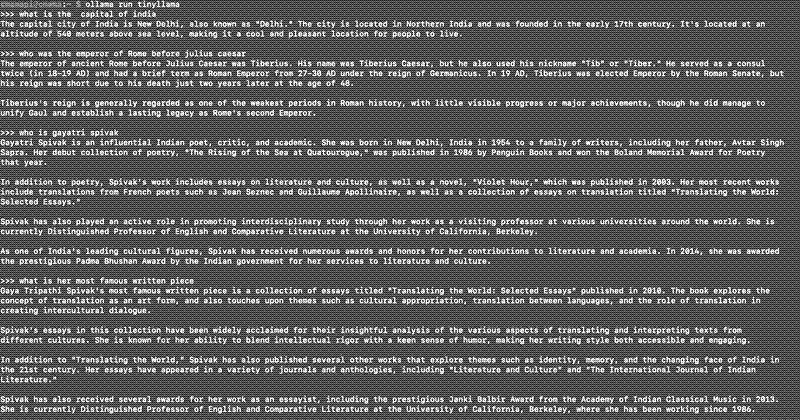

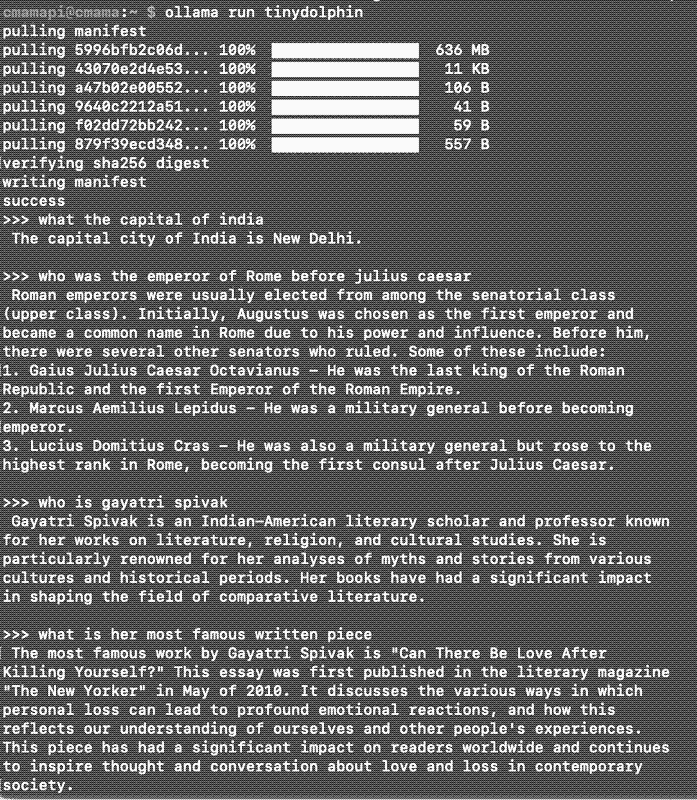

- Run Ollama with a simple, smol LM. I used TinyLlama! The choice of model was PURELY based on the fact that it was called “Tiny”llama.

- Connect it to OpenWebUI’s chat interface

- Test on the local network

- Tunnel via Cloudflare!

All these steps worked quite well, but obviously they stop when I close the terminal (I’m a forever noob with terminal things).
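For the “test on the local network” step, you don’t need a chat UI at all. Ollama’s `POST /api/generate` endpoint streams its answer back as one JSON object per line, so you can watch TinyLlama’s tokens arrive as they are generated, which helps on a slow Pi. This minimal sketch assumes the model was pulled as `tinyllama` and that Ollama listens on port 11434. To call it from another machine, swap `127.0.0.1` for the Pi’s address; a native Ollama install may listen only on localhost unless `OLLAMA_HOST` is set, for example to `0.0.0.0`. The function names are my own.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def iter_tokens(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from Ollama's newline-delimited JSON stream, stopping at done."""
    for raw in lines:
        if not raw.strip():
            continue
        chunk = json.loads(raw)
        yield chunk.get("response", "")
        if chunk.get("done"):
            break


def generate(prompt: str, model: str = "tinyllama",
             base_url: str = "http://127.0.0.1:11434") -> Iterator[str]:
    """Stream a completion from POST /api/generate (streaming is Ollama's default)."""
    req = urllib.request.Request(
        base_url.rstrip("/") + "/api/generate",
        data=json.dumps({"model": model, "prompt": prompt}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        # The response body can be iterated line by line, one JSON object per line.
        yield from iter_tokens(resp)


if __name__ == "__main__":
    for piece in generate("Why is the sun bright? Answer in one sentence."):
        print(piece, end="", flush=True)
    print()
```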

Trying Docker

I had never used Docker, but it seemed to be an easy and well-documented format for servers, in particular for a Raspberry Pi as a server. You can find more about Raspberry Pi hosting at https://pi-hosted.com/, by the amazing YouTuber Novaspirit Tech.

You can follow the instructions available on their website/youtube playlist!

Once I had tested simpler Docker containers like Jellyfin, I was interested in running Ollama + OpenWebUI as a Docker container. There are several tutorials for this, but I think the best solution was the most obvious one: OpenWebUI’s README! (Credit to sud, who shared this link with me!)

This was very exciting; however, I had no active cooling on my Raspberry Pi 4, and the board was overheating (I could smell some plastic burning!).
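A cheap safeguard before adding a fan is to watch the board temperature while a model runs. This sketch assumes Raspberry Pi OS exposes the SoC temperature, in millidegrees Celsius, at `/sys/class/thermal/thermal_zone0/temp`. `vcgencmd measure_temp` is an alternative way to read it. The 75 °C warning threshold and the helper names are my own hypothetical choices, not official Raspberry Pi figures.

```python
import time
from pathlib import Path

# On Raspberry Pi OS this file normally holds the SoC temperature in millidegrees Celsius.
THERMAL_FILE = Path("/sys/class/thermal/thermal_zone0/temp")


def millidegrees_to_celsius(raw: str) -> float:
    """Convert the file's integer text (e.g. '48312') to degrees Celsius."""
    return int(raw.strip()) / 1000


def read_cpu_temp(path: Path = THERMAL_FILE) -> float:
    return millidegrees_to_celsius(path.read_text())


def too_hot(temp_c: float, limit_c: float = 75.0) -> bool:
    # 75 °C is an arbitrary warning threshold, not an official Raspberry Pi limit.
    return temp_c >= limit_c


if __name__ == "__main__":
    # Print the temperature every 5 seconds until interrupted with Ctrl+C.
    while True:
        t = read_cpu_temp()
        print(f"{t:.1f} °C" + ("  <- too hot, stop the model!" if too_hot(t) else ""))
        time.sleep(5)
```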

I’ll write more about the solar connection in the next post.